Are you not getting the desired results out of your A/B test experiments? Wondering if there are ways to improve your split tests for better outcomes?

A lot of businesses make A/B testing mistakes that cost them time and money, mostly because they don’t know how to run the tests correctly.

A/B testing is an amazing way to improve your website conversions. At OptinMonster, we have seen many customers use split testing to achieve significant results:

- AtHoc used A/B testing to improve conversions by 25%.

- ChinaImportal used it to grow its email list by 200%.

- Logic Inbound used split tests to increase its conversions by 1500%.

But if you’re making one of the common split testing mistakes listed below, your split tests might be doing more harm than good.

Qubit says that poorly done split tests can cause businesses to invest in unnecessary changes and can even hurt their profits.

The truth is, there’s a lot more to A/B testing than just setting up a test. To succeed with A/B test results, you’ll need to run them the right way and avoid the errors that can undermine your outcomes.

In this post, I’ll share the most common A/B testing mistakes many businesses make so you can avoid them. You’ll also learn how to use split testing the right way so that you can discover the hidden strategies that can skyrocket your conversions.

Let’s get started!

13 Most Common A/B Testing Mistakes You Should Avoid

Here’s the list of mistakes I’ll cover in this post:

- Split Testing the Wrong Page

- Having an Invalid Hypothesis

- Split Testing Too Many Items

- Running Too Many Split Tests at Once

- Getting the Timing Wrong

- Working with the Wrong Traffic

- Testing Too Early

- Changing Parameters Mid-Test

- Measuring Results Inaccurately

- Using Different Display Rules

- Running Tests on the Wrong Site

- Giving Up on Split Testing

- Blindly Following Split Testing Case Studies

1. Split Testing the Wrong Page

One of the biggest problems with A/B testing is running your tests on the wrong pages. It’s important to avoid wasting time and valuable resources with pointless split testing.

But how do you know which pages to test? If you’re marketing a business, the answer is easy: the best pages to test are the ones that make a difference in conversions and sales.

Hubspot suggests that the best pages to test are the ones that get the most traffic, such as:

- Home page

- About page

- Contact page

- Pricing page

- Blog page

Product pages are especially important for eCommerce sites to test because those tests can give you data on how you can improve your sales funnel.

Simply put, if a page isn’t part of your sales or digital marketing strategy, there’s no point testing it. You can prioritize a page for your split testing experiments once you start noticing a spike in organic traffic or an improved engagement rate.

If making a change on a page won’t affect the bottom line, move on. Instead, test a page that’ll give you immediate results.

2. Having an Invalid Hypothesis

A common A/B testing pitfall to avoid is not having a valid hypothesis.

An A/B testing hypothesis is a theory about why you are getting particular results on a web page and how you can improve those results.

Let’s break this down a little more. To form a hypothesis, you’ll need to consider the following steps:

- Step 1: Pay attention to whether people are converting on your site. You’ll get this information from analytics software that tracks and measures what people do on your site. For example, it’ll tell you which call to action (CTA) buttons are getting more click-throughs, if people are signing up for your newsletter, or which product is selling the most.

- Step 2: Make calculated guesses about why certain behaviors are happening. For example, if people are landing on your site but aren’t downloading a lead magnet, or if your checkout page has a high bounce rate, then perhaps your CTA is to blame.

- Step 3: Come up with possible ideas that can lead to desirable user behavior. For example, in the above scenario, you can create multiple versions of CTAs to improve your lead magnet downloads.

- Step 4: Figure out how you will measure the success of the test. This is an essential part of a successful A/B testing hypothesis.

Here’s how you can work it out in a real-life scenario:

- Observation: There’s good traffic to our lead magnet landing page, but the conversion is low. Most visitors to the page aren’t downloading the lead magnet.

- Possible reasons: The low conversion rates might be because the existing CTA isn’t clear and compelling enough.

- Suggested fix: We’ll change the CTA button’s color and text to make it more compelling and visually pleasing.

- Measurement: We’ll know we’re right if we increase sign-ups by 10% in a 1-month period from the test launch date.

Note that you need all the elements for a valid hypothesis: observing data, speculating about reasons, coming up with a theory for how to fix it, and measuring results after implementing a fix.

If the test doesn’t yield satisfactory results, that means one of your assumptions was probably wrong. To succeed, you’ll need to revise the hypothesis and repeat the experiment until you get a conclusive result.
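To make the measurement step concrete, here’s a minimal Python sketch of the example hypothesis above. The `Hypothesis` class, its field names, and the `relative_lift` and `is_confirmed` helpers are hypothetical names I made up for illustration; they aren’t part of any testing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """The four parts of a hypothesis, written down before the test starts."""
    observation: str
    possible_reason: str
    suggested_fix: str
    target_lift: float  # relative lift that counts as success, e.g. 0.10 for 10%


def relative_lift(baseline_signups: int, test_signups: int) -> float:
    """Relative change in sign-ups compared with the baseline period."""
    if baseline_signups <= 0:
        raise ValueError("baseline_signups must be positive")
    return (test_signups - baseline_signups) / baseline_signups


def is_confirmed(h: Hypothesis, baseline_signups: int, test_signups: int) -> bool:
    """True if the measured lift reaches the target set in the hypothesis."""
    return relative_lift(baseline_signups, test_signups) >= h.target_lift


cta_hypothesis = Hypothesis(
    observation="Good traffic to the landing page, but few downloads",
    possible_reason="The CTA isn't clear and compelling enough",
    suggested_fix="Change the CTA button",
    target_lift=0.10,
)

# Made-up numbers: 200 sign-ups in the month before, 230 in the test month.
print(is_confirmed(cta_hypothesis, baseline_signups=200, test_signups=230))
```

Writing the target down before the test starts makes it harder to move the goalposts once the results come in.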

3. Split Testing Too Many Items

Here’s another common mistake in A/B testing that many marketers make: trying to test too many items at once.

It might seem like testing multiple page elements at once saves time, but it doesn’t. What happens is that you’ll never know which change is responsible for the improved (or poor) results.

Good split testing involves changing one item on a page and testing it against another version of the same item.

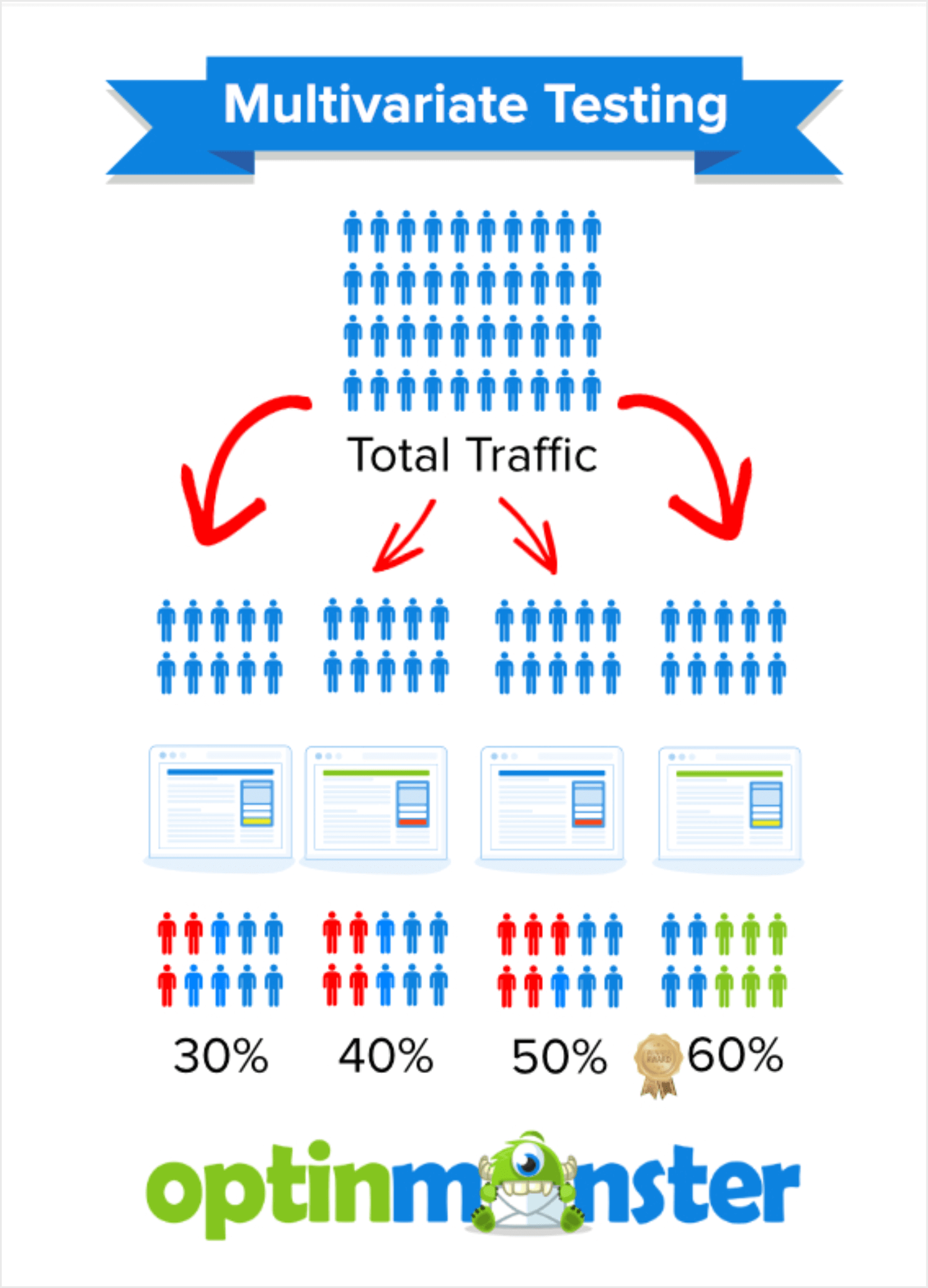

The minute you change more than one item at a time, you need a multivariate test. A multivariate test lets you test combinations of changes to several page elements at the same time.

You can read more about it in detail in our comparison of split testing versus multivariate testing.

Here’s a simple visual explanation of how a multivariate test works:

Multivariate testing is a great way to test a website redesign where you have to change lots of page elements. But you can end up with a lot of combinations to test, and that takes time you might not want to invest.

Multivariate testing also only works well for high-traffic sites and pages. In most cases, however, a simple split test will get you the most meaningful results.
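To see how quickly the combinations pile up, here’s a short Python sketch that lists every version a multivariate test would need. The element names and values are made-up examples.

```python
from itertools import product

# Hypothetical page elements and the options we want to try for each one.
elements = {
    "headline": ["Original headline", "New headline"],
    "cta_text": ["Download now", "Get the free guide", "Send me the guide"],
    "button_color": ["green", "orange"],
}


def all_combinations(options):
    """Return one dict per combination of element values."""
    names = list(options)
    return [dict(zip(names, combo)) for combo in product(*options.values())]


combos = all_combinations(elements)
print(len(combos))  # prints 12, because 2 * 3 * 2 = 12
```

Every one of those 12 versions needs its own share of traffic, which is why this approach suits high-traffic pages best.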

4. Running Too Many Split Tests at Once

When it comes to running A/B tests, it’s important to keep things simple.

While it’s perfectly fine to run multiple split tests, it’s not advisable to run more than 4 split tests at a time.

For example, you can get meaningful results by testing 3 different versions of your CTA button. Running these tests isn’t the same as multivariate testing, because you’re still only changing a single item for each test.

But the more variations you run, the bigger the A/B testing sample size you need. That’s because you have to send more traffic to each version to get reliable results.

This comes down to A/B testing statistical significance. In simple terms, it means collecting enough data that the difference you see between versions is unlikely to be down to chance.

You can check if you have a good enough sample size for your tests with the tools mentioned in our split testing guide.
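If you’d like a rough feel for the numbers, here’s a Python sketch that estimates the sample size per version using the standard normal approximation for comparing two conversion rates. The function name, the 80% power default, and the example rates are my assumptions, and a dedicated calculator may use a different method.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_relative_lift,
                            alpha=0.05, power=0.80):
    """Approximate visitors needed per version for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    min_relative_lift: smallest lift worth detecting, e.g. 0.20 for 20%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1 and p1 != p2):
        raise ValueError("rates must be between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


per_variant = sample_size_per_variant(baseline_rate=0.05, min_relative_lift=0.20)
for versions in (2, 3, 4):
    # Total traffic grows with every version you add to the test.
    print(versions, "versions:", per_variant * versions, "visitors in total")
```

This simple version doesn’t adjust for comparing several variations against the same control, so treat the totals as a lower bound.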

5. Getting the Timing Wrong

Timing is everything with A/B testing. But there are a few classic mistakes people make related to running a split test at the wrong time.

Comparing Different Time Periods

Let’s understand this with an example. If a page gets most of its traffic on Wednesdays, it doesn’t make sense to compare the test results for that day with the results on a low-traffic day.

This is an important point to remember if you’re an eCommerce retailer. That’s because it can be misleading to compare your test results from Thanksgiving or Christmas with the results you get during January’s sales slump.

It’s also important to pay attention to external factors that might affect your split testing results. If you’re marketing locally and natural disasters cause disruptions in people’s lives, you won’t get the results you expect.

Similarly, winter-related offers just won’t have the same impact during summer months, according to Small Business Sense.

In any case, you shouldn’t compare apples to oranges if you want reliable results. Instead, run your tests for comparable periods so you can accurately assess whether any change yields different outcomes.

Not Running a Test for Long Enough

You need to run an A/B test for a certain amount of time to achieve statistical significance.

As an industry standard, you should aim for a 95% confidence level in your results. Roughly speaking, that means there’s only about a 5% chance you’d see a difference this large if your change actually made no difference, so you can base your decision-making on the findings.

The test duration can vary depending on the number of variants and the expected conversions. For instance, if you’re running 2 variants and expect 50 conversions, your testing period will be shorter than if you have 4 variants and are looking for 200 conversions.
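Here’s a back-of-the-envelope Python sketch of that relationship. I’m assuming the conversion target applies to each variant and that traffic is split evenly; the function names, daily traffic, and conversion rate are made up for illustration.

```python
from math import ceil


def estimated_test_days(variants, conversions_per_variant,
                        daily_visitors, conversion_rate):
    """Days needed for every variant to reach its conversion target."""
    if min(variants, conversions_per_variant, daily_visitors) <= 0:
        raise ValueError("counts must be positive")
    if not 0 < conversion_rate <= 1:
        raise ValueError("conversion_rate must be between 0 and 1")
    visitors_needed = variants * conversions_per_variant / conversion_rate
    return ceil(visitors_needed / daily_visitors)


def round_up_to_full_weeks(days):
    """Round up to whole weeks so every weekday is counted equally."""
    return ceil(days / 7) * 7


short_test = estimated_test_days(2, 50, daily_visitors=500, conversion_rate=0.02)
long_test = estimated_test_days(4, 200, daily_visitors=500, conversion_rate=0.02)
print(round_up_to_full_weeks(short_test), round_up_to_full_weeks(long_test))
```

Rounding up to full weeks also helps with the weekday problem I described above, since busy and quiet days both get included.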

Want to check if you have achieved statistical significance in your A/B tests? Head over to Visual Website Optimizer (VWO)’s Statistical Significance Calculator page.

The page allows you to enter the number of visitors and conversions for your test so that it can calculate the statistical significance.
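If you’re curious what a check like this can look like under the hood, here’s a Python sketch of a two-proportion z-test, one common way to test significance. It isn’t necessarily the method VWO’s calculator uses, and the function names and sample numbers are my own.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(visitors_a, conversions_a, visitors_b, conversions_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 1.0  # no conversions (or all conversions) in both groups
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def is_significant(visitors_a, conversions_a, visitors_b, conversions_b,
                   confidence=0.95):
    """True if the difference clears the chosen confidence level."""
    p_value = two_proportion_p_value(visitors_a, conversions_a,
                                     visitors_b, conversions_b)
    return p_value < 1 - confidence


# Made-up numbers: the control converts 50 of 1,000, the variation 100 of 1,000.
print(is_significant(1000, 50, 1000, 100))
# With only 100 visitors each, a 5 vs. 6 split is far too close to call.
print(is_significant(100, 5, 100, 6))
```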

Testing Different Time Delays

When you’re running split tests with OptinMonster, your campaign’s timing can affect the success of your tests. One of the frequent mistakes I see people make in their tests is that they compare 2 different campaigns with different time delays.

For example, if a popup campaign appears after visitors have been on a landing page for 5 seconds, and another shows up after 20 seconds, that’s not a fair comparison.

That’s because you’re not comparing similar audiences. Typically, a lot more people will spend 5 seconds on a page than 20 seconds.

As a result, you’ll see different impressions for each campaign. And the results won’t make sense or be useful to you.

For a true split test, you have to change 1 item on the page, not the timing. But if you want to experiment with timing your optins, this article on popups, welcome gates, and slide-in campaigns has some great suggestions.

6. Working With the Wrong Traffic

To get meaningful results out of your A/B tests, it’s important that your site drives a healthy amount of traffic to its pages.

If you have a high-traffic site, you can complete split tests relatively fast because of the constant flow of visitors to your site.

But if you have a low-traffic site or sporadic visits, you’ll need to run the test for longer.

It’s also important to split your traffic the right way so you really are testing like against like. Some A/B testing tools allow you to manually allocate the traffic you are using for the test.

But it’s much easier to split traffic automatically to avoid the possibility of getting unreliable results from the wrong kind of split.
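For the curious, here’s one common way an automatic split can work, sketched in Python: hash each visitor’s ID so the same person always lands in the same version and traffic is divided roughly evenly. This is a general technique, not a description of how any particular tool works internally, and `assign_variant` plus the IDs below are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(visitor_id: str, test_name: str, variants: Sequence[str]) -> str:
    """Deterministically pick a variant for a visitor.

    The same visitor_id and test_name always give the same variant,
    and different visitors are spread roughly evenly across variants.
    """
    if not variants:
        raise ValueError("variants must not be empty")
    key = f"{test_name}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


variants = ["control", "variation"]
counts = {name: 0 for name in variants}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "cta-test", variants)] += 1
print(counts)  # close to a 50/50 split
```

Including the test name in the hash means a visitor who sees the control in one test isn’t automatically stuck with the control in every other test.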

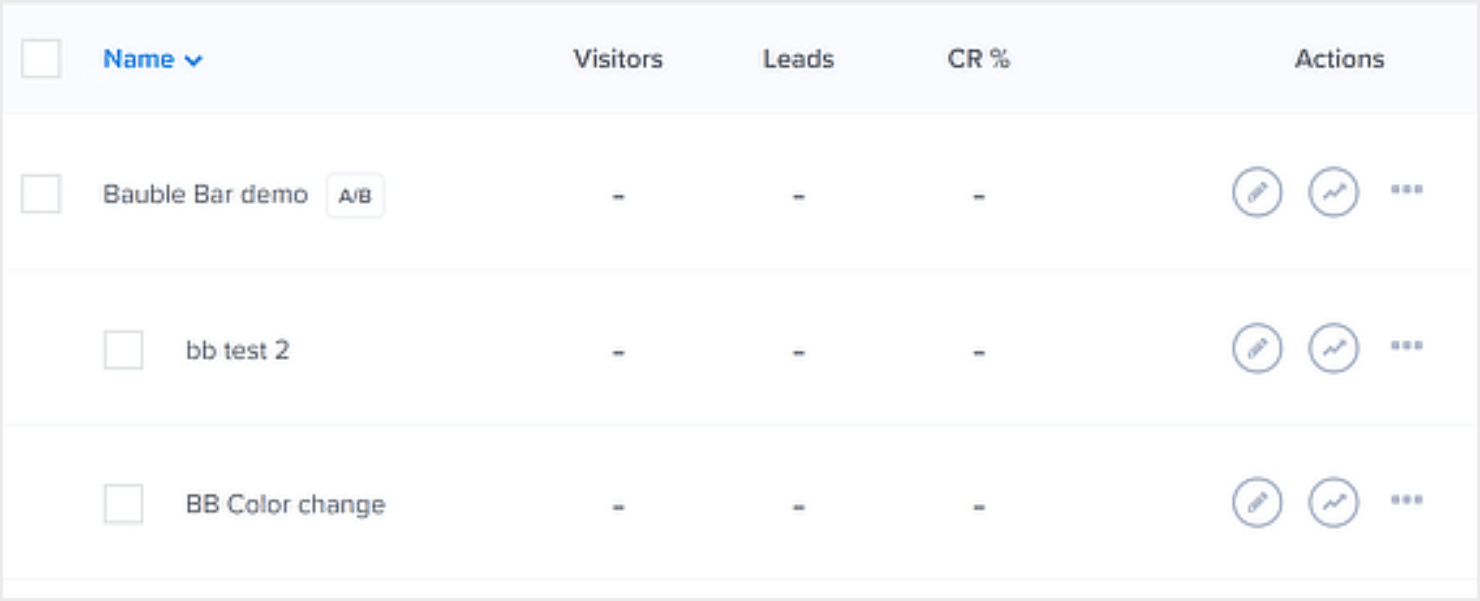

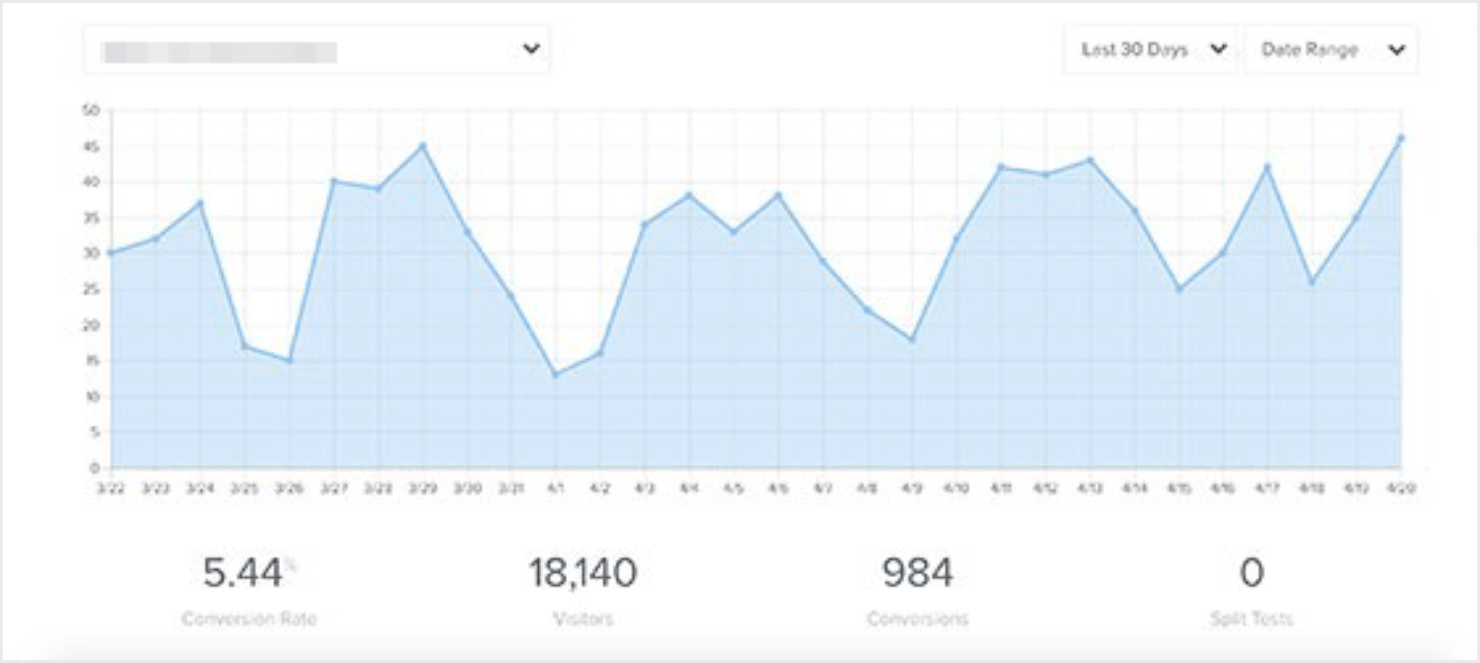

If you’re using OptinMonster’s A/B testing functionality, it’s easy to get this right. That’s because OptinMonster automatically segments your traffic according to the number of tests you’re running:

7. Testing Too Early

Testing too soon is a recipe for failure in A/B testing and can cloud your marketing judgment.

For example, if you start a new OptinMonster campaign, you should wait a bit before starting a split test.

That’s because there’s no point in creating a split test when you don’t have enough data to compare. When you rush to test brand-new pages, you’ll essentially be testing against nothing, which is a waste of time.

As a rule of thumb, run your new campaigns for at least a week and see how they perform before you start tweaking and testing.

As I mentioned in mistake #5, the more conversions you want, the longer your test duration should be.

8. Changing Parameters Mid-Test

If you want to mess up your A/B testing outcome, change your testing parameters or variables in the middle of the experiment.

This mistake usually happens if you:

- Decide to change the amount of web traffic that sees the control or the variation.

- Add new variables or change the existing ones before the test ends.

- Alter your split testing goals.

Sudden changes invalidate your test and skew your results.

If you absolutely need to change something, then start your test again, or create a new one. It’s the best way to get results you can rely on.

9. Measuring Results Inaccurately

Measuring results correctly is as important as testing accurately. Yet it’s another area where marketers make costly A/B testing mistakes.

If you don’t measure results properly, you can’t rely on your data. This means you can’t make data-driven decisions and fine-tune your marketing campaigns for better results.

One of the best ways to solve this is to ensure that your A/B testing solution works with Google Analytics. That way, you’ll have better control over understanding your test results and actionable insights on what to do next.

OptinMonster integrates with Google Analytics, so you can see accurate data about traffic and conversions in your dashboard:

Here’s how you integrate Google Analytics with OptinMonster so you can get real-time, actionable data points. You can also set up your own Google Analytics dashboard to collect campaign data with the rest of your web metrics.

10. Using Different Display Rules

Display rules, in the context of marketing campaigns, are a set of conditions that let you control where and how your campaigns appear to visitors on your site.

OptinMonster has powerful display rules that control which campaigns show when, where, or based on specific user behaviors. These rules can include parameters such as:

- If someone is visiting your site for the first time.

- If they are returning visitors.

- The time they spend on a page.

- Certain pages they visit on your site.

- The devices they are using to visit your site.

- Their intention to leave a page.

- Their geographical location.

- How far they have scrolled down a page.

- The amount of time they are inactive on a page.

- If they have added a product to their shopping cart.

- If they have already seen or interacted with an existing campaign.

Just like you shouldn’t randomly change your testing parameters midway through a test, making arbitrary changes to the display rules can ruin your test outcomes.

As I said earlier, split tests are about changing one element on the page. If you change the display rules so that one optin shows to people in the UK and another to people in the US, that’s not an apples-to-apples comparison.

If one campaign is a fullscreen welcome mat, and another is an Exit-Intent® campaign, you’re setting up your test for failure. If one campaign shows up at 9 am and the other at 9 pm, you might get false positives that can jeopardize your marketing efforts in the future.
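One way to catch this before you launch is to compare the two setups side by side. Here’s a Python sketch that does that. The dictionary layout, field names, and helper functions are hypothetical and don’t reflect OptinMonster’s actual settings format.

```python
def changed_fields(control: dict, variation: dict) -> list:
    """Names of the fields whose values differ between two dicts."""
    keys = set(control) | set(variation)
    return sorted(key for key in keys if control.get(key) != variation.get(key))


def is_fair_comparison(control: dict, variation: dict) -> bool:
    """Fair means identical display rules and exactly one content change."""
    same_rules = control["display_rules"] == variation["display_rules"]
    content_changes = changed_fields(control["content"], variation["content"])
    return same_rules and len(content_changes) == 1


control = {
    "content": {"headline": "Get the free guide", "button_text": "Download"},
    "display_rules": {"country": "US", "delay_seconds": 5, "type": "popup"},
}
good_variation = {
    "content": {"headline": "Get the free guide", "button_text": "Send it to me"},
    "display_rules": {"country": "US", "delay_seconds": 5, "type": "popup"},
}
bad_variation = {
    "content": {"headline": "Get the free guide", "button_text": "Send it to me"},
    "display_rules": {"country": "UK", "delay_seconds": 20, "type": "popup"},
}

print(is_fair_comparison(control, good_variation))  # only the button text differs
print(is_fair_comparison(control, bad_variation))   # display rules differ too
print(changed_fields(control["display_rules"], bad_variation["display_rules"]))
```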

For more information, check out our guide to using Display Rules with OptinMonster. This will help you set rules for who sees your campaigns, where, and when.

11. Running Tests on the Wrong Site

This is one of the silliest A/B testing mistakes marketers fall for. Many of them test their marketing campaigns on a development site, which is not so bad by itself.

What’s worse is that sometimes they forget to move their chosen campaigns over to the live site and they see that their split tests aren’t working.

This is bad because the only people who visit a development site are web developers, not customers. Luckily, making the switch is an easy fix. If you’re not seeing positive results, it’s worth checking for this issue.

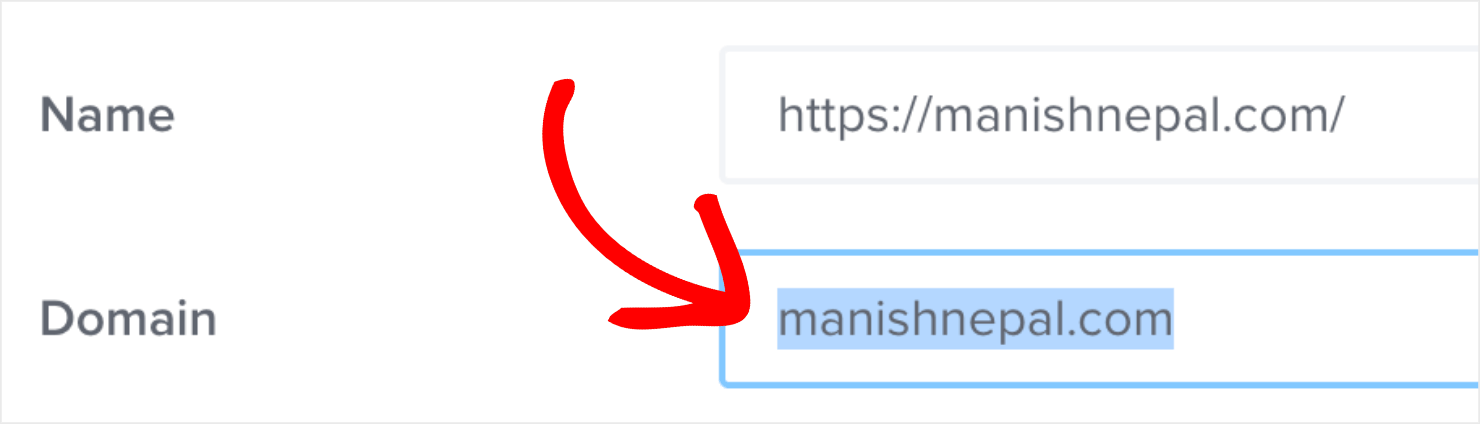

If you’re using OptinMonster, here’s how you fix this issue:

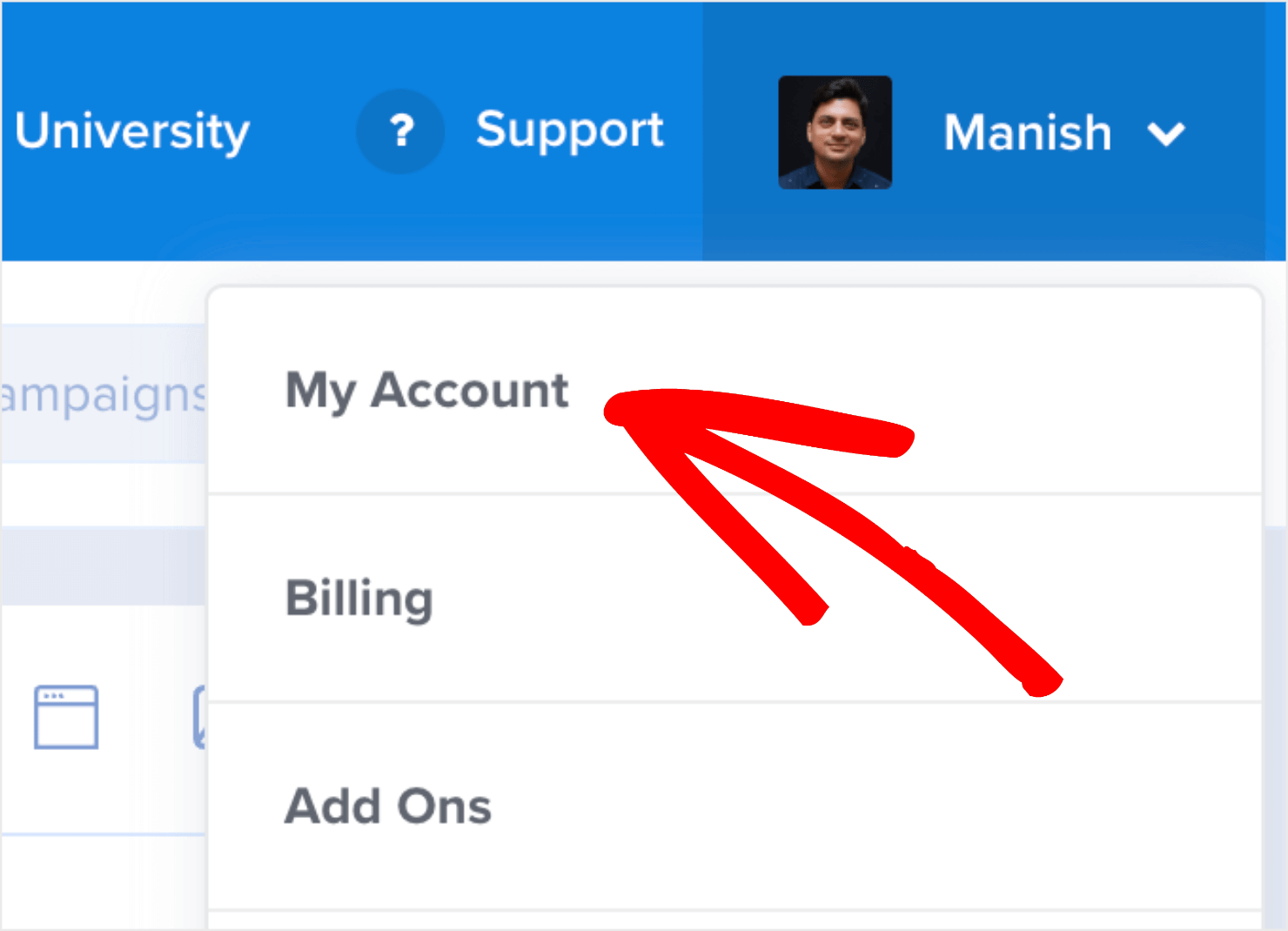

Log in to the OptinMonster dashboard and click on your profile icon at the top-right of the screen. From the dropdown menu, click on My Account:

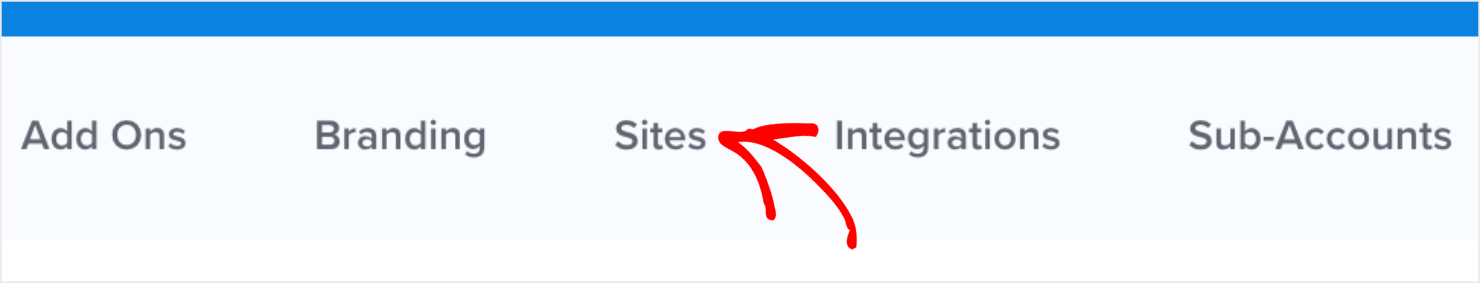

Go to Sites from the top menu:

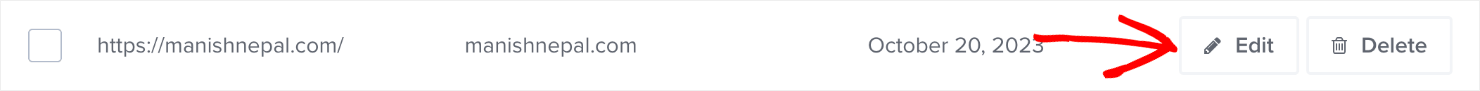

Choose the site you want to change and click Edit:

Change the website URL from the dev site to the live site:

Once you’re done, scroll down to the page’s bottom to save your changes:

And that’s it!

To learn more, read our help doc on how to add, delete, or edit a website in OptinMonster.

12. Giving Up on Split Testing

Some brands keep testing their sites, which means they are always running conversion rate optimization (CRO) tests to improve their site’s user experience (UX). Others, not so much.

One of the A/B testing mistakes you must avoid is ending your test prematurely. For example, don’t stop your test before the 1-week time window that I mentioned earlier. You also shouldn’t stop a test halfway through because you assume it has failed or because it isn’t giving you the results you wanted.

Quitting a test too soon is a terrible error because you won’t get the optimization benefits.

It’s important to know that there’s no such thing as a failed split test because the goal of a test is to gather data.

You can learn something from an experiment even if it doesn’t yield the outcomes you expected. Plus, the data you’ll discover might help you fine-tune your next experiment.

You should also go with what the data says rather than relying on your gut feeling, which is prone to confirmation bias. You can always run a new test at the end of the testing period to see if different changes will achieve the results that you hoped for.

Also, don’t stop the test abruptly if you haven’t had enough time to get a decent sample size or achieve statistical significance at a 95% confidence level. Otherwise, you’ll waste your time.

13. Blindly Following Split Testing Case Studies

It’s great to read case studies and learn about the split testing techniques that have worked for different companies.

But one A/B testing mistake you must avoid is copying what worked for others.

If that seems counterintuitive, hear me out.

It’s fine to use case studies to get ideas for how and what to test. But be aware that what worked for another business might not work for yours, because every business is unique.

Instead, use A/B testing case studies as a point of reference for creating your own A/B testing strategy. That’ll let you see what works best for your own target audience, not someone else’s.

Put Your A/B Tests to Good Use

Now that you know the most common A/B testing mistakes that can waste your time and marketing efforts, you can get your split testing off to a good start.

If you liked this post, you might be interested in more resources related to A/B testing:

- What Is Split Testing? Best Split Testing Tools & Strategies

- Handpicked A/B Testing Examples to Get You More Conversions

- How to Split Test Email Campaigns the RIGHT Way [Ultimate Guide]

OptinMonster makes it really easy to split test your marketing campaigns so you can get better results.

Want to see how easy (and error-free) it is to create and test your campaigns? Sign up with OptinMonster today!

Disclosure: Our content is reader-supported. This means if you click on some of our links, then we may earn a commission. We only recommend products that we believe will add value to our readers.